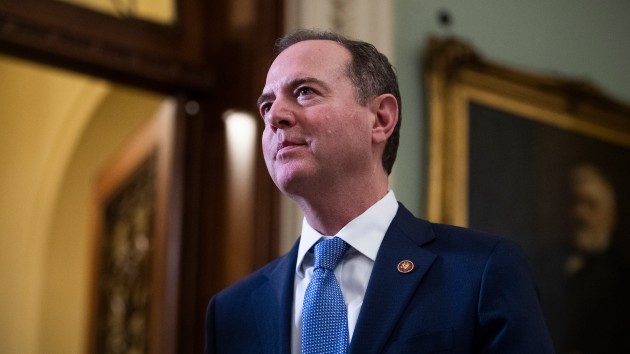

Tom Williams/CQ-Roll Call, Inc via Getty Images

By CATHERINE SANZ, ABC News

(WASHINGTON) — Google and YouTube are deliberately not disclosing misinformation on their platforms to avoid the scrutiny leveled at other social media companies, California lawmaker Adam Schiff said Monday.

Schiff, chairman of the House Intelligence Committee, said Google, which owns YouTube, seemed to rely on a policy of revealing “as little as possible” to avoid being criticized for inaction on misinformation. He contrasted this with the way other platforms have disclosed misinformation or revealed disinformation tactics intercepted by moderators.

“If they [Google] don’t discuss it or reveal it, then there is no problem,” he said.

Schiff was speaking as part of a forum on the consequences of digital platforms’ “misinformation negligence” hosted by George Washington University’s Institute for Data, Democracy and Politics (IDDP). Monday’s forum was focused on misinformation that misleads voters and hurts election integrity.

He joins a growing number of Democrats who have spoken critically of social media companies in recent weeks, including House Speaker Nancy Pelosi, who spoke at the forum earlier this month.

His criticism of Google comes as a new report from the Tech Transparency Project (TTP) found that Google was pushing scam ads on Americans who searched how to vote. TTP found that search terms like “register to vote,” “vote by mail” and “where is my polling place” generated ads linking to websites that charge bogus fees for voter registration, harvest user data or plant unwanted software on people’s browsers.

Schiff, who held a virtual hearing with representatives of the major social media platforms earlier in June, said he did not believe all companies were moving in the right direction on tackling and preventing misinformation.

“I do get a sense that there is something going on at Twitter,” he said, adding that he feels like senior employees there have “reached their last straw” regarding misinformation and election interference tactics.

“I still get the sense that Facebook will need to be pulled and dragged into this era of corporate responsibility, the economic incentives are simply too powerful for continuation of the status quo,” he said.

When asked what could be done to prevent social media misinformation from influencing the 2020 election, Schiff hinted at removing “immunity” granted to social media platforms under Section 230 of the Communications Decency Act. This law, introduced in 1996, protects online platforms from liability for user-generated content. Schiff made similar comments last summer after speaking out on the dangers of deepfakes, or AI-manipulated videos.

“The kind of wild west we have online right now is breeding rampant disinformation, division within our own society, and poses a real threat to democracies around the world,” he added.

Also speaking at the forum was Stacey Abrams, former Democratic leader of the Georgia House of Representatives and the founder of Fair Fight, an organization that combats voter suppression and defends voting rights.

Abrams said she thought there was more attention given to misinformation on Facebook because its CEO, Mark Zuckerberg, “put a target on his back” by speaking publicly about the issue. She was referring to Zuckerberg maintaining a Facebook policy of not policing or moderating political ads that contain false information. He said last week that users would soon be allowed to turn off the ads if they do not want to view them.

“While [Zuckerberg] has made some mincing steps toward correction, they have been fully underwhelming,” she said.

A spokesperson for Google directed ABC News to YouTube’s transparency report, which details actions taken on videos, including how many were removed from the platform. The report for January to March 2020 states that YouTube removed over six million videos and nearly 700 million comments from its platform. “Spam” was the top reason for the removal of both videos and comments. Other reasons included child safety, nudity or violence.

“We have strict policies in place to protect users from false information about voting procedures, and when we find ads that violate our policies and present harm to users, we remove them and block advertisers from running similar ads in the future,” Google said in a statement.

Facebook has said it is working to combat misinformation with a number of measures, including “connecting people to credible information” and investing in a global fact-checking program.

Copyright © 2020, ABC Audio. All rights reserved.